Today we're going to learn a great machine learning technique called document classification. We will use different classifiers such as Naive Bayes, SVM and KNN.

Introduction to document classification

Document classification is an example of Machine Learning (ML) in the form of Natural Language Processing (NLP). By classifying text, we are aiming to assign one or more classes or categories to a document, making it easier to manage and sort. This is especially useful for publishers, news sites, blogs or anyone who deals with a lot of content.


If you search online for document classification, you will find many commercial applications of text classification; email spam filtering is perhaps the most ubiquitous.

Today we will work with the ag_news dataset (link to download). Put another way: "given a piece of text, determine whether it belongs to the Sports, Science and Tech, World or Business category".

We will use:

- Python 3
- Jupyter notebook

Here you can find the full notebook.

Load Data

When opening the CSV file, we can see 3 columns which are [category, title, text]. We will be interested in 2 columns which are category and text.
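A minimal sketch of the loading step, assuming pandas is installed. The tiny inline sample stands in for the real file so the snippet runs on its own; with the downloaded dataset you would pass the path to the CSV instead. The sketch also assumes the file has no header row, so the three column names described above are supplied by hand.

```python
import io
import pandas as pd

# Made-up two-row sample standing in for the real CSV (assumed: no header row).
sample_csv = io.StringIO(
    '3,"Oil prices rise","Crude futures climbed on supply worries."\n'
    '2,"Cup final tonight","Both teams are at full strength for the final."\n'
)

# With the real data, replace sample_csv with the path to the downloaded file.
df = pd.read_csv(sample_csv, header=None, names=["category", "title", "text"])
print(df.head())
```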

And now we can see our data frame.

Then we will replace NaN values, if any exist, with a space.
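One way to do this, sketched on a made-up data frame (the rows are illustrative, not taken from the dataset), is pandas' fillna:

```python
import pandas as pd

# Made-up rows; the second title is missing on purpose.
df = pd.DataFrame({
    "category": [3, 2],
    "title": ["Oil prices rise", None],
    "text": ["Crude futures climbed.", "Both teams are ready."],
})

# Replace every missing value with a single space so later string
# operations (cleaning, joining columns) do not fail on NaN.
df = df.fillna(" ")
```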

I am going to delete the title column. If you don't want to delete it, you can join title and text into one field like this:
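A possible version of the join, shown on a one-row made-up data frame. The column name full_text matches the one used in this post; the space separator is an assumption.

```python
import pandas as pd

df = pd.DataFrame({
    "category": [3],
    "title": ["Oil prices rise"],
    "text": ["Crude futures climbed."],
})

# Join title and text into one field, separated by a space (assumed separator).
df["full_text"] = df["title"] + " " + df["text"]

# Drop the original columns once they are merged.
df = df.drop(columns=["title", "text"])
```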

We added a new column called full_text, and we will delete the others to save memory.

In my case, I just deleted the titles.

Now we will enumerate our class names.

We will also change our category column from numbers to names.
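A sketch of both steps on a made-up data frame. It assumes the usual AG News label order (1 = World, 2 = Sports, 3 = Business, 4 = Sci/Tech); check this against your copy of the file before relying on it.

```python
import pandas as pd

df = pd.DataFrame({"category": [3, 1, 4, 2], "text": ["a", "b", "c", "d"]})

# Assumed label order: 1=World, 2=Sports, 3=Business, 4=Sci/Tech.
class_names = ["World", "Sports", "Business", "Sci/Tech"]
id_to_name = dict(enumerate(class_names, start=1))

# Replace each numeric category with its name.
df["category"] = df["category"].map(id_to_name)
```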

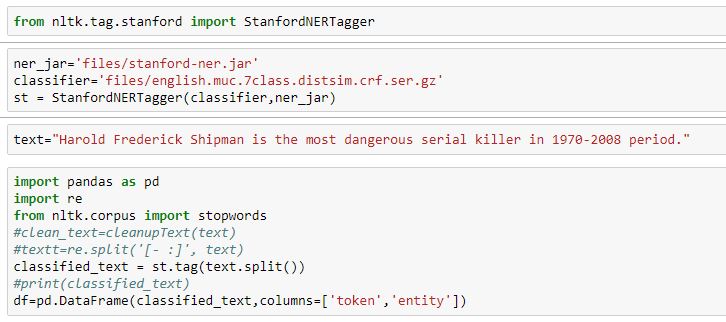

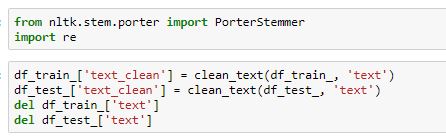

Now everything is ready and we can begin. First of all, we will clean our data and stem it. The goal of stemming is to "normalize" words to their common base form, which is useful for many text-processing applications, especially in our case, document classification.

So, we have this function, which keeps only alphanumeric characters and replaces all whitespace with a single space.
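Here is a minimal sketch of such a function using only the standard library. The name clean_text and the optional stem parameter are illustrative choices, and replacing punctuation with a space (rather than deleting it) is an assumption made so that neighbouring words do not get glued together.

```python
import re

def clean_text(text, stem=None):
    """Keep only alphanumeric characters, collapse whitespace, optionally stem."""
    # Replace anything that is not a letter, digit or whitespace with a space.
    text = re.sub(r"[^A-Za-z0-9\s]", " ", text)
    # Collapse every run of whitespace into a single space.
    text = re.sub(r"\s+", " ", text).strip()
    # Optionally apply a word-level stemming function to each token.
    if stem is not None:
        text = " ".join(stem(word) for word in text.split())
    return text

print(clean_text("Hello,   world!! 2024\n"))  # Hello world 2024
```

If NLTK is installed, passing PorterStemmer().stem as the stem argument is one way to plug in the stemming step.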

Now we will convert the data frame series to lists.

Next, we will convert our text to a matrix of features; for this we will use scikit-learn's CountVectorizer and TfidfTransformer.
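The conversion itself is short. In this sketch the data frame is made up and the variable names texts and labels are placeholders:

```python
import pandas as pd

df = pd.DataFrame({
    "category": ["Sports", "Business"],
    "text": ["The team won the match", "Stocks fell sharply"],
})

# Series.tolist() returns plain Python lists, which scikit-learn accepts directly.
texts = df["text"].tolist()
labels = df["category"].tolist()
```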
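A small sketch of the two-step feature extraction on a made-up corpus, with default parameters throughout (the original settings are not shown here, so treat the defaults as an assumption). CountVectorizer builds the term-count matrix and TfidfTransformer reweights it.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer

train_texts = [
    "the team won the football match",
    "the striker scored two goals",
    "stocks fell as markets closed lower",
    "the company reported higher profits",
]

# Step 1: learn the vocabulary and count how often each term occurs per document.
count_vect = CountVectorizer()
X_counts = count_vect.fit_transform(train_texts)

# Step 2: turn raw counts into tf-idf weights (rows are L2-normalized by default).
tfidf = TfidfTransformer()
X_tfidf = tfidf.fit_transform(X_counts)

print(X_tfidf.shape)  # (number of documents, vocabulary size)
```

At prediction time, new documents must go through count_vect.transform and tfidf.transform (not fit_transform), so they are mapped onto the vocabulary learned from the training set.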

Now we will build our models!

Let's begin with Naive Bayes (I will try Bernoulli Naive Bayes and Multinomial Naive Bayes).

But first, let's write a confusion matrix function that we can use with all the classifiers.
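One possible shape for such a helper, assuming matplotlib is available. This is a sketch, not the original function: the name plot_confusion_matrix, its parameters and the colour map are all illustrative.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.metrics import confusion_matrix

def plot_confusion_matrix(y_true, y_pred, class_names, title="Confusion matrix"):
    """Compute the confusion matrix, draw it as a heat map, and return it."""
    # Rows are true labels, columns are predicted labels, in class_names order.
    cm = confusion_matrix(y_true, y_pred, labels=class_names)

    fig, ax = plt.subplots()
    im = ax.imshow(cm, interpolation="nearest", cmap=plt.cm.Blues)
    fig.colorbar(im, ax=ax)

    ticks = np.arange(len(class_names))
    ax.set_xticks(ticks)
    ax.set_xticklabels(class_names, rotation=45, ha="right")
    ax.set_yticks(ticks)
    ax.set_yticklabels(class_names)

    # Write the count inside each cell.
    for i in range(cm.shape[0]):
        for j in range(cm.shape[1]):
            ax.text(j, i, str(cm[i, j]), ha="center", va="center")

    ax.set_xlabel("Predicted label")
    ax.set_ylabel("True label")
    ax.set_title(title)
    fig.tight_layout()
    return cm

cm = plot_confusion_matrix(["a", "b", "a"], ["a", "b", "b"], ["a", "b"])
```

Returning the matrix as well as drawing it makes it easy to reuse the same numbers for other metrics.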

Naive Bayes

Bernoulli Naive Bayes
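A minimal sketch of training a Bernoulli Naive Bayes classifier on tf-idf features. The toy corpus and labels are made up so the snippet runs on its own, and the default hyperparameters are an assumption. Note that BernoulliNB binarizes its input at 0.0 by default, so it effectively only sees whether a term is present or absent.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.naive_bayes import BernoulliNB
from sklearn.pipeline import Pipeline

train_texts = [
    "the team won the football match",
    "the striker scored two goals",
    "stocks fell as markets closed lower",
    "the company reported higher profits",
]
train_labels = ["Sports", "Sports", "Business", "Business"]

# Chain feature extraction and the classifier so new text is handled the same way.
bnb = Pipeline([
    ("counts", CountVectorizer()),
    ("tfidf", TfidfTransformer()),
    ("clf", BernoulliNB()),  # binarize=0.0 by default: presence/absence features
])
bnb.fit(train_texts, train_labels)

predictions = bnb.predict(["the team scored late goals"])
print(predictions)
```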

Let's plot its confusion matrix (we forgot to import numpy before the confusion matrix function; just add import numpy as np).

Multinomial Naive Bayes
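The multinomial variant works on the tf-idf weights themselves rather than on presence/absence. Again a sketch on a made-up corpus with default settings; predict_proba is used here to show the per-class probabilities.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

train_texts = [
    "the team won the football match",
    "the striker scored two goals",
    "stocks fell as markets closed lower",
    "the company reported higher profits",
]
train_labels = ["Sports", "Sports", "Business", "Business"]

mnb = make_pipeline(CountVectorizer(), TfidfTransformer(), MultinomialNB())
mnb.fit(train_texts, train_labels)

# One row per document, one column per class (columns follow mnb.classes_).
proba = mnb.predict_proba(["markets closed higher on strong profits"])
print(list(mnb.classes_), proba)
```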

SVM

Linear SVM
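For the linear SVM, scikit-learn's LinearSVC is one option that scales well to sparse text features. The sketch below uses a made-up corpus and the default regularization strength, both of which are assumptions.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

train_texts = [
    "the team won the football match",
    "the striker scored two goals",
    "stocks fell as markets closed lower",
    "the company reported higher profits",
]
train_labels = ["Sports", "Sports", "Business", "Business"]

linear_svm = make_pipeline(CountVectorizer(), TfidfTransformer(), LinearSVC())
linear_svm.fit(train_texts, train_labels)

svm_predictions = linear_svm.predict(["the football team scored", "profits fell"])
print(svm_predictions)
```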

Non-Linear SVM
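A non-linear SVM can be sketched with SVC and an RBF kernel. The kernel choice is an assumption (the kernel used originally is not stated), and the corpus is made up. Kernel SVMs train much more slowly than LinearSVC on large datasets, so you may want to try this on a subset first.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

train_texts = [
    "the team won the football match",
    "the striker scored two goals",
    "stocks fell as markets closed lower",
    "the company reported higher profits",
]
train_labels = ["Sports", "Sports", "Business", "Business"]

# kernel="rbf" is an assumed choice; "poly" and "sigmoid" are other built-in kernels.
rbf_svm = make_pipeline(CountVectorizer(), TfidfTransformer(), SVC(kernel="rbf"))
rbf_svm.fit(train_texts, train_labels)

rbf_predictions = rbf_svm.predict(["the striker won the match"])
print(rbf_predictions)
```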

KNN
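A sketch of k-nearest neighbours on the same kind of features. The value n_neighbors=3 is an assumption chosen because the made-up corpus has only four documents; k must not exceed the number of training samples, and on the real dataset it is worth tuning.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

train_texts = [
    "the team won the football match",
    "the striker scored two goals",
    "stocks fell as markets closed lower",
    "the company reported higher profits",
]
train_labels = ["Sports", "Sports", "Business", "Business"]

# Each new document is labelled by a majority vote among its 3 closest neighbours.
knn = make_pipeline(
    CountVectorizer(),
    TfidfTransformer(),
    KNeighborsClassifier(n_neighbors=3),
)
knn.fit(train_texts, train_labels)

knn_predictions = knn.predict(["the company reported lower profits"])
print(knn_predictions)
```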

Never hesitate to ask questions if you have any :)
